The Statistics Wars and Their Casualties Videos & Slides from Sessions 1 & 2

Below are the videos and slides from the 6 talks from Session 1 and Session 2 of our workshop The Statistics Wars and Their Casualties held on September 22 & 23, 2022. Session 1 speakers were: Deborah Mayo (Virginia Tech), Richard Morey (Cardiff University), Stephen Senn (Edinburgh, Scotland). Session 2 speakers were: Daniël Lakens (Eindhoven University of Technology), Christian Hennig (University of Bologna), Yoav Benjamini (Tel Aviv University). Abstracts can be found here and the schedule here. Some participant related publications are on this page.

The final 2 sessions of our online workshop (Sessions 3 and 4) were held on Thursdays, Dec 1 and Dec 8, 2022 from 1500-1815 (London time) and 10am-1:15pm (New York City time), see list of speakers and link to videos at the end of this post.

SESSION 1

Brief Intro to Session 1 by David Hand (Imperial College)

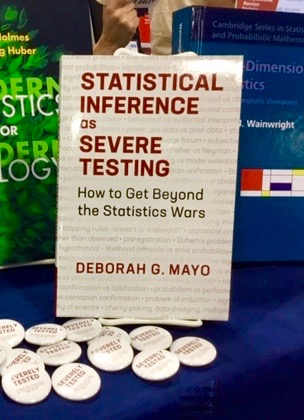

Deborah Mayo (Virginia Tech):

The Statistics Wars and Their Casualties

Richard Morey (Cardiff University)

Bayes factors, p values, and the replication crisis

Slide show is posted on his webpage here.

Stephen Senn (Edinburgh)

The replication crisis: are P-values the problem and are Bayes factors the solution?

Session 1 Discussion

SESSION 2

[Brief Intro to Session 2 by Stephen Senn (Edinburgh)]

Daniël Lakens (Eindhoven University of Technology)

The role of background assumptions in severity appraisal

Christian Hennig (University of Bologna)

On the interpretation of the mathematical characteristics of statistical tests

Yoav Benjamini (Tel Aviv University)

The two statistical cornerstones of replicability: addressing selective inference and irrelevant variability

Session 2 Discussion

SESSIONS #3 & #4

The videos and slides from the 7 talks from Session 3 and Session 4 of our workshop The Statistics Wars and Their Casualties held on December 1 & 8, 2022 can be found on this post. Session 3 speakers were: Daniele Fanelli (London School of Economics and Political Science), Stephan Guttinger (University of Exeter), and David Hand (Imperial College London). Session 4 speakers were: Jon Williamson (University of Kent), Margherita Harris (London School of Economics and Political Science), Aris Spanos (Virginia Tech), and Uri Simonsohn (Esade Ramon Llull University). Abstracts can be found here. In addition to the talks, you’ll find (1) a Recap of recaps at the beginning of Session 3 that provides a summary of Sessions 1 & 2, and (2) Mayo’s (5 minute) introduction to the final discussion: “Where do we go from here (Part ii)”at the end of Session 4.

Recent Comments