
V. (June 18) The Statistics Wars and Their Casualties

Reading:

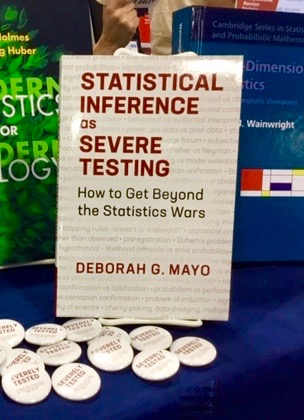

SIST: Excursion 4 Tour III: pp. 267-286; Farewell Keepsake pp. 436-444

-Amrhein, V., Greenland, S., & McShane, B. (2019). Comment: Retire Statistical Significance. Nature, 567: 305-308.

-Ioannidis, J. (2019). “The Importance of Predefined Rules and Prespecified Statistical Analyses: Do Not Abandon Significance.” JAMA, 321(21): 2067–2068. doi:10.1001/jama.2019.4582

-Mayo, D. G. (2019). P-value thresholds: Forfeit at your peril. Eur J Clin Invest, 49: e13170. doi:10.1111/eci.13170

Recommended (and fun): “P-values on Trial: Selective Reporting of (Best Practice Guides Against) Selective Reporting”

Mayo Memos for Meeting 5:

-Souvenirs Meeting 5: S: Preregistration and Error Probabilities, T: Even Big Data Calls for Theory and Falsification, Z: Understanding Tribal Warfare

-Bonus meeting: 25 June (see General Schedule)

-Selected blogposts on the Significance Test Wars, March to December 2019:

- March 25, 2019: “Diary for Statistical War Correspondents on the Latest Ban on Speech.”

- June 17, 2019: “The 2019 ASA Guide to P-values and Statistical Significance: Don’t Say What You Don’t Mean” (Some Recommendations)(ii)

- July 19, 2019: The NEJM Issues New Guidelines on Statistical Reporting: Is the ASA P-Value Project Backfiring? (i)

- November 4, 2019: On some Self-defeating aspects of the ASA’s 2019 recommendations of statistical significance tests

- November 14, 2019: The ASA’s P-value Project: Why it’s Doing More Harm than Good (cont from 11/4/19)

- November 30, 2019: P-Value Statements and Their Unintended(?) Consequences: The June 2019 ASA President’s Corner (b)

- December 13, 2019: “Les stats, c’est moi”: We take that step here! (Adopt our fav word of Phil Stat!) iii

-ASA 2016 Guide’s Six Principles (PDF)

Slides & Video Links for Meeting 5:

Slides: Draft Slides for 18 June (not beautiful)

Video Meeting 5: (Viewing in full screen mode helps with buffering issues.)

VIDEO LINK: https://wp.me/abBgTB-n8

1. If critics of tests have no faith in them, why do they look to them to determine whether your field is undergoing a replication crisis?

2. In the case of test T+, SEV(~C) = 1 − SEV(C). Does SEV obey the probability calculus?

3. How has COVID science influenced the Stat Wars, and how will it?
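The complement rule in question 2 can be checked numerically. This is a minimal sketch under stated assumptions: T+ is taken to be the one-sided Normal test of H0: μ ≤ μ0 vs H1: μ > μ0 with known σ, the inputs x̄ = 152 and σ/√n = 1 are hypothetical, and the usual T+ formulas are assumed, SEV(μ > μ1) = Pr(X̄ ≤ x̄; μ = μ1) and SEV(μ ≤ μ1) = Pr(X̄ > x̄; μ = μ1). The two are computed separately, as a lower and an upper Normal tail. For every μ1 they sum to 1 up to rounding. That confirms the complement rule for this pair of one-sided claims only; it does not by itself show that SEV is a probability measure.

```python
from math import erf, erfc, sqrt

def sev_mu_greater(mu1: float, xbar: float, se: float) -> float:
    """SEV(mu > mu1) in test T+: Pr(Xbar <= xbar; mu = mu1), a lower Normal tail."""
    return 0.5 * (1.0 + erf((xbar - mu1) / (se * sqrt(2.0))))

def sev_mu_at_most(mu1: float, xbar: float, se: float) -> float:
    """SEV(mu <= mu1) in test T+: Pr(Xbar > xbar; mu = mu1), an upper Normal tail."""
    return 0.5 * erfc((xbar - mu1) / (se * sqrt(2.0)))

# Hypothetical inputs: observed mean 152 and standard error sigma/sqrt(n) = 1.
XBAR, SE = 152.0, 1.0

for mu1 in (150.0, 152.0, 153.0):
    c = sev_mu_greater(mu1, XBAR, SE)
    not_c = sev_mu_at_most(mu1, XBAR, SE)
    print(f"mu1 = {mu1}: SEV(C) = {c:.3f}, SEV(~C) = {not_c:.3f}, sum = {c + not_c:.3f}")
```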

1. Because the “test” procedure leaves a lot of leeway to the analyst: they can do it well or badly. I have no faith in bad tests, and given how prevalent they are, my faith in any newly published test is wobbly; but I do have faith in good tests. I recall saying on Twitter that we need to trust the “data vigilantes” or “methodological terrorists” to do their work well (and some have recently got into meta-meta-science about this), and someone compared me to a real-life vigilante, the good guy with a gun. Well, they had a point. But I worked first on hospital quality indicators, and I think about it in that sense: we need an audit procedure with some teeth, and we have to accept that it will sometimes get things wrong, with serious consequences for the wrongly accused; we just have to be a lot better than most, and keep pushing for ever better standards.

2. I’m not sure what you mean by probability calculus…?

3. I said this at the time, but I’ll put it here for future visitors: through public awareness of the fallibility of science, not in a binary way where either the doctor knows best or it’s all a conspiracy, but in a more nuanced way, with real appreciation of study design and analytical traps. It’s going to be interesting when all the tech startups promising AI systems that will detect SARS-CoV-2 from people’s voices (or whatever) finally have to report to their shareholders.

Thanks for this seminar, another great conversation and opportunity to reflect on the foundations. I jotted this down and wonder whether others find it useful as a summary regarding Bayesians, or indeed think I am quite wrong:

“Bayesian analysis has the supposed advantage, in terms of avoiding false claims, of putting all (or many) assumptions out in the open, but the disadvantage that biasing selection effects (multiplicity and such) are not accommodated in the numerical Bayes machinery. We can’t Bonferroni-adjust the posterior or the Bayes factor, for example. Some people unfortunately take this to mean that there is no problem in Bayes. In fact, we are delegating responsibility to the readers and experts.”

(To be clear, delegating responsibility to readers and experts is not a good move; it’s the problem with bad practice in p-values too, although that paradigm at least has some quantitative tools. And yes, you can do funny things with priors, but I just don’t see how that is really a quantifiable intervention on the reliability of the resulting claims, even if there are numbers involved.)
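The Bonferroni point in the quoted summary can be illustrated with a toy calculation. This sketch assumes a single reported statistic z = 2.5 (hypothetical) that may have been selected from m tests, a one-sided p-value, and a simple-versus-simple Bayes factor for H1: μ = 2.5 against H0: μ = 0 with z ~ N(μ, 1); all of these are chosen only for simplicity. The Bonferroni-adjusted p-value grows with m, while the Bayes factor, which depends on the data only through the likelihood, comes out the same whatever m is. Not every Bayesian would stop there: multiplicity can be brought in through the prior or a hierarchical model, the sort of prior-based move the comment questions.

```python
from math import erfc, exp, sqrt

def one_sided_p(z: float) -> float:
    """Upper-tail p-value Pr(Z >= z) under H0: mu = 0, with Z ~ N(0, 1)."""
    return 0.5 * erfc(z / sqrt(2.0))

def bonferroni(p: float, m: int) -> float:
    """Bonferroni-adjusted p-value for a result selected from m tests."""
    return min(1.0, m * p)

def bayes_factor_10(z: float, delta: float) -> float:
    """Likelihood ratio N(z; delta, 1) / N(z; 0, 1) for H1: mu = delta vs H0: mu = 0."""
    return exp(delta * z - delta ** 2 / 2.0)

Z_OBS, DELTA = 2.5, 2.5  # hypothetical observed statistic and simple alternative

p = one_sided_p(Z_OBS)
bf = bayes_factor_10(Z_OBS, DELTA)  # m never enters the likelihood, so BF10 ignores m
for m in (1, 10, 20):
    print(f"m = {m:2d}: Bonferroni-adjusted p = {bonferroni(p, m):.4f}, BF10 = {bf:.2f}")
```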

I am not too sure about question 2, but I’ll give it a go anyway! (Sorry if I am on the wrong track.)

I take it that for SEV to obey the probability calculus, SEV must be a measure on (Ω, F) satisfying the probability axioms, where Ω is the set of all possible values of mu and F is the collection of claims built from claims of the form mu > a and mu < a, containing Ω and closed under complement and countable unions.

But I think neither the second nor the third axiom is satisfied, since SEV(Ω) and SEV(¬C ∪ C) are undefined?

Margherita:

This came in just before our last seminar, so I hadn’t had time to study it. It’s an interesting question. I’m not sure why you say SEV(Ω) and SEV(¬C ∪ C) are undefined. I do agree that SEV doesn’t obey the probability calculus because both C and ~C can have low SEV. However, I wouldn’t be surprised if someone designed a way for it to accord with the probability axioms. For one thing, recall that assigning a low SEV to claims with unknown severity was a convention. One can define a different convention.

Dear Professor Mayo,

Sorry for the late reply!

Although giving low SEV to claims with unknown severity is a convention, it definitely seems like a good one to me! So even though one might try to come up with a different convention so that SEV obeys the probability calculus, I think they would have a hard time defending it…

But besides this conventional aspect, the reason I think SEV(Ω) is undefined is this. Using the example on p. 43: to compute the severity with which the claim C: mu > 153 passed test T with data x, we had to evaluate the probability Pr(X̄ ≤ 152; C is false), and we evaluated it at mu = 153 because under this value of mu, claim C is false, etc. But if we consider the claim S: mu ∈ Ω, it is really not clear to me how to calculate its severity, because there is no value of mu for which claim S is false! Perhaps I am missing something… but even if we can’t apply the same machinery to calculate the severity of S: mu ∈ Ω, we could perhaps just set SEV = 1 in this case (by convention).
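The calculation described in this comment can be sketched as follows. Assumptions: test T+ with a Normal X̄, the observed x̄ = 152 from the comment, and a hypothetical standard error of 1. One way to see why the evaluation point is mu = 153: take the smallest value of Pr(X̄ ≤ 152; mu) over the mu values at which the claim is false (here a crude finite grid, also an assumption). Because that probability shrinks as mu grows, the minimum for C: mu > 153 falls at mu = 153. For S: mu ∈ Ω no mu makes the claim false, so there is nothing to evaluate, and the function returns None rather than a number; that is the gap a convention such as SEV = 1 would have to fill.

```python
from math import erf, sqrt
from typing import Callable, List, Optional

def normal_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def sev_upper_claim(claim_false: Callable[[float], bool], grid: List[float],
                    xbar: float, se: float) -> Optional[float]:
    """Smallest Pr(Xbar <= xbar; mu) over grid points where the claim is false.

    Returns None when no grid point makes the claim false, as with the
    tautology mu in Omega: there is no mu at which to evaluate the probability.
    """
    probs = [normal_cdf((xbar - mu) / se) for mu in grid if claim_false(mu)]
    return min(probs) if probs else None

XBAR = 152.0  # observed mean, from the comment
SE = 1.0      # hypothetical standard error of Xbar (an assumption)
GRID = [140.0 + 0.5 * k for k in range(41)]  # crude grid of mu values, 140.0 to 160.0

sev_c = sev_upper_claim(lambda mu: mu <= 153.0, GRID, XBAR, SE)  # C: mu > 153
sev_s = sev_upper_claim(lambda mu: False, GRID, XBAR, SE)        # S: mu in Omega
print(f"SEV(C) = {sev_c:.3f}; SEV(S) = {sev_s}")
```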

Dear Margherita:

Great to hear from you. Why isn’t mu ∈ Ω a tautology (for the given problem)?

Yes, indeed it is a tautology (for the given problem)! But although the probability of a tautology is arguably 1, why should this imply the severity of a tautology is also 1?

That’s not the reason I imagine giving, but rather something like this: everything has been done that could have found it false, yet did not find it so.

All: I just completed a new paper that I’ve been working on for the last week, so I’m feeling a little lazy today, and I owe this group my weekly letter. I expect to hold the next session on July 30 rather than the week before; that was a mistake. Richard Morey will present on Sept 24.

I notice that, for the first time, the color changes in the comments worked as I intended in my exchange with Margherita just now: my comments are in cream and the colors alternate. My regular blog manages the switch, but there is no way to ensure it on this blog; it varies. I guess it’s my art and design background that makes me care about such things.