The fifth meeting of our Phil Stat Forum*:

The Statistics Wars and Their Casualties

28 January, 2021

TIME: 15:00-16:45 (London); 10-11:45 a.m. (New York, EST)

“How can we improve replicability?”

Alexander Bird

ABSTRACT: It is my view that the unthinking application of null hypothesis significance testing is a leading cause of a high rate of replication failure in certain fields. What can be done to address this, within the NHST framework?

Alexander Bird: President, British Society for the Philosophy of Science; Bertrand Russell Professor, Department of Philosophy, University of Cambridge; Fellow and Director of Studies, St John’s College, Cambridge. Previously he was the Peter Sowerby Professor of Philosophy and Medicine in the Department of Philosophy, King’s College London. Before that he held the chair in Philosophy at the University of Bristol, and before that he was lecturer and then reader at the University of Edinburgh. His work is principally in those areas where philosophy of science overlaps with metaphysics and epistemology. He has a particular interest in the philosophy of medicine, especially methodological issues in causal and statistical inference. Website: http://www.alexanderbird.org

For information about the Phil Stat Wars forum and how to join, click on this link.

Readings:

Bird, A. (2018). Understanding the Replication Crisis as a Base Rate Fallacy. The British Journal for the Philosophy of Science, axy051 (13 August 2018).

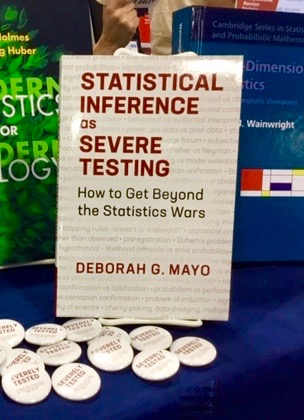

A few pages from D. Mayo: Statistical Inference as Severe Testing: How to get beyond the statistics wars (SIST), pp. 361-370: Section 5.6 “Positive Predictive Value: Fine for Luggage”.
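Both readings concern the positive predictive value (PPV) of a “significant” result: the share of significant findings that reflect real effects. Below is a minimal sketch of the standard calculation. It assumes that a fraction `prior` of the hypotheses tested in a field are true and that every test has the same power and significance level. The function name and all numbers are hypothetical, chosen only to show how strongly the base rate drives the result.

```python
def positive_predictive_value(prior, power, alpha):
    """Share of 'significant' results that reflect real effects, assuming a
    fraction `prior` of tested hypotheses are true, each tested with the
    given power at significance level alpha."""
    true_positives = prior * power           # real effects that reach significance
    false_positives = (1 - prior) * alpha    # null effects that reach significance
    return true_positives / (true_positives + false_positives)

# Hypothetical base rates, with power 0.8 and alpha 0.05 held fixed.
for prior in (0.5, 0.1, 0.01):
    print(prior, round(positive_predictive_value(prior, power=0.8, alpha=0.05), 3))
```

With these illustrative settings the loop gives roughly 0.94, 0.64 and 0.14. The test and its error rates are unchanged across rows; only the base rate differs.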

Slides and Video Links: (to be posted when available)

Alexander Bird Presentation:

- Link to paste in browser: https://philstatwars.files.wordpress.com/2021/01/bird_presentation.mp4?_=1

Alexander Bird Discussion:

- Link to paste in browser: https://philstatwars.files.wordpress.com/2021/02/bird_discussion.mp4?_=1

Mayo’s Memos: Any info or events that arise that seem relevant to share with y’all before the meeting. Please check back closer to the meeting day.

*Meeting 13 of our general Phil Stat series, which began with the LSE Seminar PH500 on May 21

Please post comments and queries on the Bird presentation here.

Thank you for your excellent talk and for identifying so clearly the issues surrounding poor reproducibility. I was unable to attend your talk live or ask questions at the time, but I listened a few days ago when the recording appeared.

I wonder whether the Harvard Medical School story reflects a misunderstanding caused by failing to remind the ‘victims’ of the meaning of the epidemiological term ‘false positive’, which always sounds strange to clinicians. Perhaps they should have said instead: “the likelihood of a symptom conditional on the presence of a sufficient diagnostic criterion”. Medical students will know from daily experience that the likelihood of a symptom in someone with a diagnosis is quite different from the probability of the diagnosis in someone with the symptom; the two are rarely the same.
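The gap between these two conditional probabilities can be made concrete with natural frequencies. This sketch assumes the figures usually quoted for the Harvard Medical School problem (prevalence 1 in 1,000 and a false positive rate of 5%), adds an assumed perfect sensitivity, and applies them to a hypothetical population of 100,000. The function name `natural_frequencies` is illustrative only.

```python
from fractions import Fraction

def natural_frequencies(population, prevalence, sensitivity, false_positive_rate):
    """Count true and false positives in a hypothetical screened population."""
    diseased = population * prevalence
    healthy = population - diseased
    true_pos = diseased * sensitivity          # diseased people who test positive
    false_pos = healthy * false_positive_rate  # healthy people who test positive
    return true_pos, false_pos, healthy

tp, fp, healthy = natural_frequencies(
    population=100_000,
    prevalence=Fraction(1, 1000),
    sensitivity=Fraction(1),                # assumed: every diseased person tests positive
    false_positive_rate=Fraction(5, 100),
)
p_pos_given_healthy = fp / healthy          # the 'false positive' likelihood
p_disease_given_pos = tp / (tp + fp)        # probability of disease after a positive test
print(float(p_pos_given_healthy), round(float(p_disease_given_pos), 3))  # 0.05 0.02
```

Of the 5,095 positives, only 100 have the disease. A 5% chance of a positive result without the disease therefore coexists with a roughly 98% chance of no disease given a positive result.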

Epidemiologists and clinicians often think differently, and it is clinicians who ultimately decide the ‘true’ diagnosis in screening, often by using a range of different sufficient criteria, for which a ‘false positive’ is a bizarre logical impossibility. By analogy, a sufficient criterion for the true efficacy of a treatment would be the treatment doing better than placebo in an RCT with an infinite number of patients. Once this criterion is satisfied, any prior probability of success is irrelevant, because the probability of efficacy becomes certain by definition.

Because an infinite number of samples is impossible, we have to settle for an unbiased random sampling model in which, by definition, the prior probability is uniform (otherwise the sampling would be biased). Bias can be introduced by P-hacking and similar practices, and such violations have to be excluded as far as possible by severely testing the methods. A prior probability of success could be provided by the result of an earlier random sampling study, by merging the two studies’ results in a meta-analytic way: multiplying their likelihood distributions and normalising the product [e.g. see Figure 6 and the preceding section 3.8, entitled ‘Combining probability distributions using Bayes’ rule’: https://journals.plos.org/plosone/article?id=10.1371%2Fjournal.pone.0212302#pone-0212302-g006 ]. This prior sample can also be ‘imagined’ in a Bayesian manner, and if this is done well (as by your Professor S), it will increase the probability that the study is ‘replicated’ by the true result after an infinite number of observations.
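The ‘multiply the likelihoods, then normalise’ step can be sketched numerically. This assumes each study can be summarised as a normal likelihood for the true effect, given by an observed mean difference and its standard error. The study values and the helper names `combine_on_grid` and `precision_weighted` are hypothetical. With normal likelihoods and no other prior information, the normalised product should agree with the familiar inverse-variance (fixed-effect) pooled estimate, which the sketch computes as a cross-check.

```python
import math

def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def combine_on_grid(studies, lo=-10.0, hi=10.0, n=20001):
    """Multiply each study's normal likelihood over a grid of true-effect values,
    then normalise so the weights sum to 1."""
    step = (hi - lo) / (n - 1)
    grid = [lo + i * step for i in range(n)]
    weights = []
    for theta in grid:
        w = 1.0
        for mean, se in studies:
            w *= normal_pdf(mean, theta, se)  # likelihood of the observed mean given theta
        weights.append(w)
    total = sum(weights)
    return grid, [w / total for w in weights]

def precision_weighted(studies):
    """Closed form for normal likelihoods: inverse-variance weighted mean and its SE."""
    precisions = [1 / se ** 2 for _, se in studies]
    mean = sum(p * m for p, (m, _) in zip(precisions, studies)) / sum(precisions)
    return mean, math.sqrt(1 / sum(precisions))

# Hypothetical earlier study and new study: (observed mean difference, standard error).
studies = [(1.0, 0.5), (0.6, 0.4)]
grid, post = combine_on_grid(studies)
grid_mean = sum(t * p for t, p in zip(grid, post))
grid_sd = math.sqrt(sum((t - grid_mean) ** 2 * p for t, p in zip(grid, post)))
print(round(grid_mean, 3), round(grid_sd, 3))
print(precision_weighted(studies))
```

The grid version generalises to non-normal likelihoods. The closed form shows that, in the normal case, combining studies this way amounts to weighting each study by its precision.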

It can be argued that, ideally (i.e. subject to successful severe testing), the latter probability of replication is 1 − P (one-sided), e.g. 1 − 0.025 = 0.975. It can also be argued that the probability of replicating the study with the same number of observations, in the sense that the number benefiting from treatment is again greater than on placebo, is lower, at 0.917. Further, it can be argued that the probability of replicating an initial study with a P-value of 0.025, in the sense that a subsequent study with the same number of observations also gives a P-value no higher than 0.025, is only 0.283 (for details of these calculations, see: https://osf.io/j2wq8/). In other words, judging replication by repeating a study with the same number of observations (or with any less than infinite number) might add to the impression of a replication crisis.
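Here is a sketch of where the first two figures can come from. It assumes a flat prior and a normal model with effects measured in standard-error units, so a one-sided P of 0.025 corresponds to an observed z of about 1.96. Under those assumptions the true effect has posterior N(z, 1). A repeat study of the same size has predictive distribution N(z, √2), because its own sampling error adds to the remaining uncertainty about the true effect. The function name `replication_probabilities` is hypothetical. The third figure (0.283) depends on details in the linked calculation that this sketch does not attempt to reproduce.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()

def replication_probabilities(p_one_sided):
    """Flat prior, normal model, effects in standard-error units (assumptions)."""
    z_obs = STD_NORMAL.inv_cdf(1 - p_one_sided)  # about 1.96 for P = 0.025
    # Posterior for the true effect is N(z_obs, 1): the probability that it exceeds
    # zero, i.e. that an infinitely large trial would show benefit over placebo.
    p_true_effect_positive = STD_NORMAL.cdf(z_obs)
    # A same-size repeat study's z has predictive distribution N(z_obs, sqrt(2)).
    predictive = NormalDist(mu=z_obs, sigma=2 ** 0.5)
    p_repeat_favours_treatment = 1 - predictive.cdf(0)
    return p_true_effect_positive, p_repeat_favours_treatment

infinite, same_size = replication_probabilities(0.025)
print(round(infinite, 3), round(same_size, 3))  # 0.975 0.917
```

The drop from 0.975 to 0.917 comes entirely from the extra sampling variability of a finite repeat study, not from any flaw in the original one.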

I therefore share your view that the apparently low rate of replication may not be due only to poor science (though that clearly can happen). I would be grateful for your views on my understanding of how a prior probability of success can affect reproducibility, and of the contribution that attempting to replicate studies with small numbers of observations makes to apparently poor reproducibility.

Apologies. The second sentence in the second paragraph should have read: ‘Perhaps they should have said instead: “the likelihood of a symptom conditional on the ABSENCE of a sufficient diagnostic criterion”.’